# Installing the JDK and Hadoop

The steps for installing the JDK on Linux are as follows.

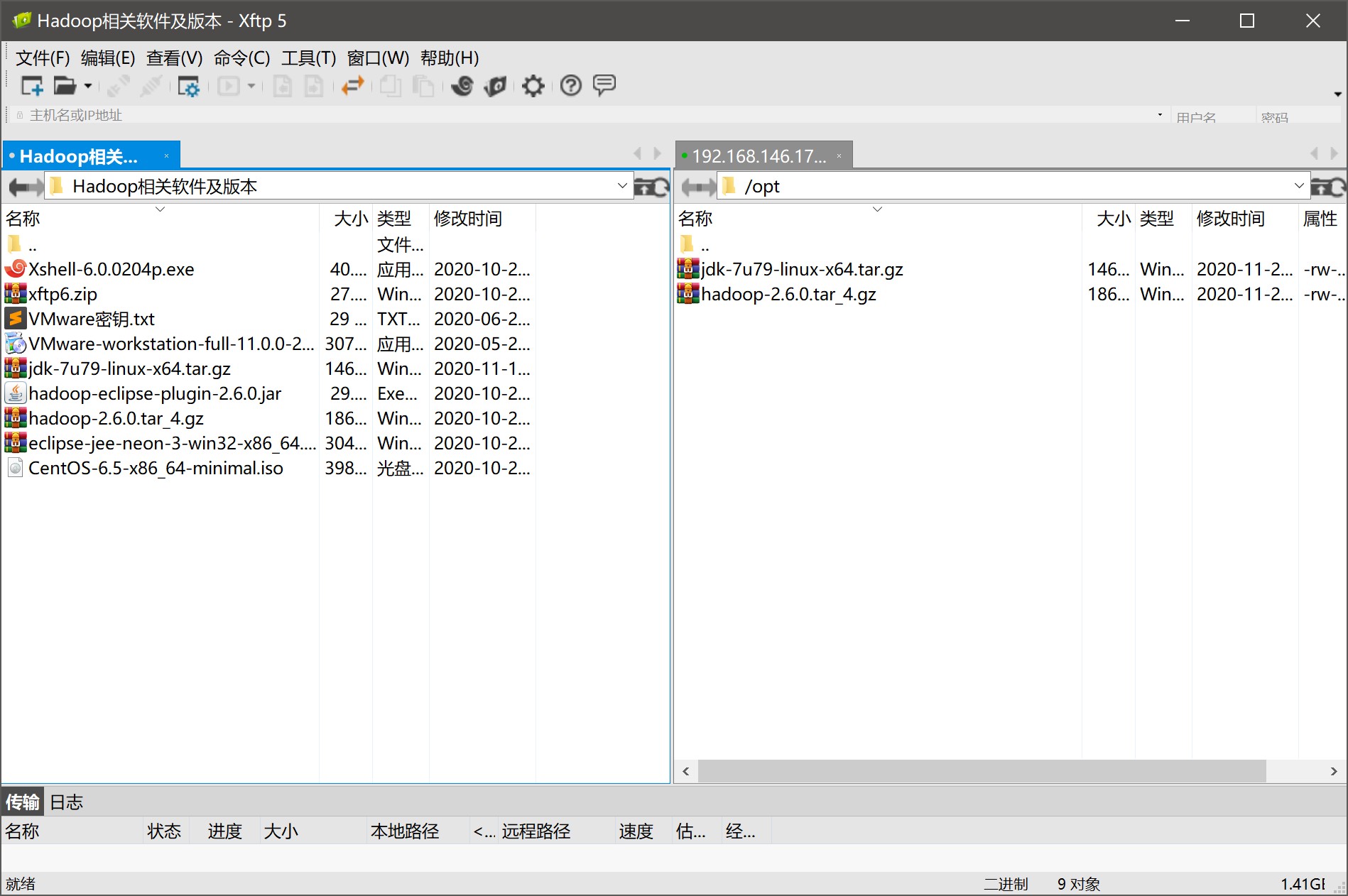

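(1) Use Xftp, the file-transfer tool that works with Xshell, to upload the JDK package to the virtual machine master. Press Ctrl+Alt+F to open the file-transfer window, then upload jdk-7u79-linux-x64.tar.gz to the /opt directory, as shown in Figure 3-1.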

(2) Go to /usr and create a folder named java, then go to /opt and run “tar -zxvf jdk-7u79-linux-x64.tar.gz -C /usr/java” to extract the archive into /usr/java.

Extracting the JDK:

    [root@master ~]
    [root@master opt]
    total 149916
    -rw-r--r--. 1 root root 153512879 Nov 23 02:47 jdk-7u79-linux-x64.tar.gz
    [root@master opt]
    [root@master usr]
    total 64
    dr-xr-xr-x.  2 root root 12288 Nov 23 01:44 bin
    drwxr-xr-x.  2 root root  4096 Sep 23  2011 etc
    drwxr-xr-x.  2 root root  4096 Sep 23  2011 games
    drwxr-xr-x.  3 root root  4096 Nov 20 01:57 include
    dr-xr-xr-x.  9 root root  4096 Nov 20 01:57 lib
    dr-xr-xr-x. 24 root root 12288 Nov 20 01:58 lib64
    drwxr-xr-x.  9 root root  4096 Nov 20 01:58 libexec
    drwxr-xr-x. 12 root root  4096 Nov 20 01:56 local
    dr-xr-xr-x.  2 root root  4096 Nov 23 01:44 sbin
    drwxr-xr-x. 61 root root  4096 Nov 20 01:58 share
    drwxr-xr-x.  4 root root  4096 Nov 20 01:56 src
    lrwxrwxrwx.  1 root root    10 Nov 20 01:56 tmp -> ../var/tmp
    [root@master usr]
    [root@master usr]
    [root@master opt]
    [root@master opt]
    [root@master usr]
    [root@master java]
    total 4
    drwxr-xr-x. 8 uucp 143 4096 Apr 11  2015 jdk1.7.0_79
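The listing for step (2) keeps only the shell prompts, so the step can be sketched as a small function. This is an illustrative sketch built from the step's description, not the author's exact session; the function name `extract_archive` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create the target directory, then unpack a .tar.gz into it.
# Usage: extract_archive <archive.tar.gz> <target-dir>
extract_archive() {
    archive=$1
    target=$2
    # Fail early with a clear message if the upload did not land where expected.
    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; return 1; }
    mkdir -p "$target" || return 1      # covers "go to /usr and create java"
    tar -zxf "$archive" -C "$target"    # -v is left out to keep the output short
}
```

Run as root, `extract_archive /opt/jdk-7u79-linux-x64.tar.gz /usr/java` would correspond to step (2) and should leave a jdk1.7.0_79 directory under /usr/java.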

(3) Add the following two lines to /etc/profile:

    export JAVA_HOME=/usr/java/jdk1.7.0_79
    export PATH=$PATH:$JAVA_HOME/bin

Editing the /etc/profile settings (the end of the file, with the two export lines appended) and the Java version output that follows:

    [root@master java]
    if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then
        umask 002
    else
        umask 022
    fi

    for i in /etc/profile.d/*.sh ; do
        if [ -r "$i" ]; then
            if [ "${-#*i}" != "$-" ]; then
                . "$i"
            else
                . "$i" >/dev/null 2>&1
            fi
        fi
    done

    unset i
    unset -f pathmunge
    export JAVA_HOME=/usr/java/jdk1.7.0_79
    export PATH=$PATH:$JAVA_HOME/bin
    [root@master java]
    [root@master java]
    java version "1.7.0_79"
    Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
    Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)
    [root@master java]
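Editing /etc/profile by hand works, but the same two lines can also be appended from a script. A minimal sketch, assuming a hypothetical helper `append_once` that adds a line only when an identical line is not already present, so a second run does not duplicate the exports:

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file unless an identical line exists.
# Usage: append_once <file> <line>
append_once() {
    file=$1
    line=$2
    # -F: fixed string, -x: match the whole line, -q: no output
    grep -Fxq -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example calls (run as root; single quotes keep $PATH and $JAVA_HOME unexpanded):
#   append_once /etc/profile 'export JAVA_HOME=/usr/java/jdk1.7.0_79'
#   append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```

After the file changes, the current shell still has to reload it (typically with `source /etc/profile`) before `java -version` reports the new JDK.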

# Installing Hadoop

(1) Go to /usr and create a folder named hadoop, then go to /opt and run “tar -zxvf hadoop-2.6.0.tar_4.gz -C /usr/hadoop” to extract the archive into /usr/hadoop.

Installing the Hadoop files:

    [root@master ~]
    [root@master opt]
    total 341280
    -rw-r--r--. 1 root root 195257604 Dec  1 04:21 hadoop-2.6.0.tar_4.gz
    -rw-r--r--. 1 root root 153512879 Dec  1 04:21 jdk-7u79-linux-x64.tar.gz
    -rw-r--r--. 1 root root    614088 Dec  1 05:20 ntp-4.2.6p5-12.el6.centos.2.x86_64.rpm
    -rw-r--r--. 1 root root     80736 Dec  1 05:20 ntpdate-4.2.6p5-12.el6.centos.2.x86_64.rpm
    [root@master opt]
    [root@master opt]
    [root@master opt]
    [root@master hadoop-2.6.0]
    total 56
    drwxr-xr-x. 2 20000 20000  4096 Nov 14  2014 bin
    drwxr-xr-x. 3 20000 20000  4096 Nov 14  2014 etc
    drwxr-xr-x. 2 20000 20000  4096 Nov 14  2014 include
    drwxr-xr-x. 3 20000 20000  4096 Nov 14  2014 lib
    drwxr-xr-x. 2 20000 20000  4096 Nov 14  2014 libexec
    -rw-r--r--. 1 20000 20000 15429 Nov 14  2014 LICENSE.txt
    drwxr-xr-x. 2 root  root   4096 Dec  1 06:05 logs
    -rw-r--r--. 1 20000 20000   101 Nov 14  2014 NOTICE.txt
    -rw-r--r--. 1 20000 20000  1366 Nov 14  2014 README.txt
    drwxr-xr-x. 2 20000 20000  4096 Nov 14  2014 sbin
    drwxr-xr-x. 4 20000 20000  4096 Nov 14  2014 share
    [root@master hadoop-2.6.0]

(2) Edit /etc/profile to set the Hadoop environment variables, then test whether Hadoop was installed successfully.

Hadoop environment variable configuration:

    [root@master ~]
    for i in /etc/profile.d/*.sh ; do
        if [ -r "$i" ]; then
            if [ "${-#*i}" != "$-" ]; then
                . "$i"
            else
                . "$i" >/dev/null 2>&1
            fi
        fi
    done

    unset i
    unset -f pathmunge
    export JAVA_HOME=/usr/java/jdk1.7.0_79
    export PATH=$PATH:$JAVA_HOME/bin
    export HADOOP_HOME=/usr/hadoop/hadoop-2.6.0
    export PATH=$PATH:$HADOOP_HOME/bin
    [root@master ~]
    [root@master ~]
    Hadoop 2.6.0
    Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1
    Compiled by jenkins on 2014-11-13T21:10Z
    Compiled with protoc 2.5.0
    From source with checksum 18e43357c8f927c0695f1e9522859d6a
    This command was run using /usr/hadoop/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0.jar
    [root@master ~]
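If the version banner does not appear, one quick thing to check is whether the bin directory actually ended up on PATH. A small sketch of such a check; the function name `bin_on_path` is hypothetical and not part of Hadoop:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if <home>/bin is one of the PATH entries.
# Usage: bin_on_path <home-dir>
bin_on_path() {
    # Wrapping PATH in colons lets one pattern match first, middle and last entries.
    case ":$PATH:" in
        *":$1/bin:"*) return 0 ;;
        *)            return 1 ;;
    esac
}

# Example: only ask for the version banner when the directory is on PATH.
#   bin_on_path "$HADOOP_HOME" && hadoop version
```

The check is purely textual: it compares PATH entries and does not test whether the hadoop launcher itself exists or is executable.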

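(3) Edit the Hadoop configuration files. The files that need to be changed are in etc/hadoop under the Hadoop installation directory and are listed in the table below.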

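| No. | Configuration file | Description |
| --- | --- | --- |
| 1 | hadoop-env.sh | Environment variables that Hadoop needs at run time. |
| 2 | yarn-env.sh | Environment variables that YARN needs at run time. |
| 3 | core-site.xml | Cluster-wide parameters that define system-level settings, such as the HDFS URL and Hadoop's temporary directory. |
| 4 | hdfs-site.xml | HDFS parameters, such as the storage locations of the NameNode and DataNodes, the number of file replicas, and file read permissions. |
| 5 | mapred-site.xml | MapReduce parameters in two parts, Job History Server and application parameters, such as the default number of reduce tasks and the default upper and lower memory limits for a task. |
| 6 | yarn-site.xml | Cluster resource-management parameters: the communication ports of the ResourceManager and NodeManager, the web monitoring port, and so on. |
| 7 | slaves | Hostnames of all slave nodes in the Hadoop cluster. |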

hadoop-env.sh: set the Java installation path.

hadoop-env.sh configuration file:

    [root@master hadoop]# vi hadoop-env.sh
    # The only required environment variable is JAVA_HOME.  All others are
    # optional.  When running a distributed configuration it is best to
    # set JAVA_HOME in this file, so that it is correctly defined on
    # remote nodes.

    # The java implementation to use.
    export JAVA_HOME=/usr/java/jdk1.7.0_79   # changed line

    # The jsvc implementation to use. Jsvc is required to run secure datanodes
    # that bind to privileged ports to provide authentication of data transfer
    # protocol.  Jsvc is not required if SASL is configured for authentication of
    # data transfer protocol using non-privileged ports.
    #export JSVC_HOME=${JSVC_HOME}

yarn-env.sh configuration file:

    [root@master hadoop]# vi yarn-env.sh
    # User for YARN daemons
    export HADOOP_YARN_USER=${HADOOP_YARN_USER:-yarn}

    # resolve links - $0 may be a softlink
    export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_YARN_HOME/conf}"

    # some Java parameters
    export JAVA_HOME=/usr/java/jdk1.7.0_79   # changed line
    if [ "$JAVA_HOME" != "" ]; then
      #echo "run java in $JAVA_HOME"
      JAVA_HOME=$JAVA_HOME
    fi

    if [ "$JAVA_HOME" = "" ]; then
      echo "Error: JAVA_HOME is not set."
      exit 1
    fi

    JAVA=$JAVA_HOME/bin/java
    JAVA_HEAP_MAX=-Xmx1000m
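Both hadoop-env.sh and yarn-env.sh receive the same one-line change, so it can be scripted instead of made twice in vi. A minimal sketch, assuming each script already contains an `export JAVA_HOME=...` line, possibly commented out; the function name `set_java_home` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: point the JAVA_HOME export line of an env script at a JDK.
# Usage: set_java_home <env-script> <jdk-dir>
# Assumes <jdk-dir> contains no "|" or "&", which are special in the sed command.
set_java_home() {
    script=$1
    jdk=$2
    # Rewrite any "export JAVA_HOME=..." line, including a commented-out one.
    sed "s|^[# ]*export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$script" > "$script.tmp" &&
        cat "$script.tmp" > "$script" &&   # write back in place, keeping permissions
        rm -f "$script.tmp"
}

# Example (paths taken from the steps above):
#   cd /usr/hadoop/hadoop-2.6.0/etc/hadoop
#   set_java_home hadoop-env.sh /usr/java/jdk1.7.0_79
#   set_java_home yarn-env.sh   /usr/java/jdk1.7.0_79
```

If a script has no such line at all, the function leaves it unchanged, so it is worth confirming the result with `grep JAVA_HOME` afterwards.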

core-site.xml configuration file:

    [root@master hadoop]# vi core-site.xml
    <configuration>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://master:8020</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/var/log/hadoop/tmp</value>
        </property>
    </configuration>
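The table lists hdfs-site.xml among the files to change, but this section shows no contents for it. The sketch below writes a minimal version from a script. The three property names are standard HDFS settings; every value (the two directories and the replica count) is a placeholder assumption, not taken from this walkthrough, and the function name `write_hdfs_site` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal hdfs-site.xml. All values are placeholders.
# Usage: write_hdfs_site <conf-dir> <name-dir> <data-dir> <replication>
write_hdfs_site() {
    cat > "$1/hdfs-site.xml" <<EOF
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file://$2</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file://$3</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>$4</value>
  </property>
</configuration>
EOF
}

# Example with assumed directories and two replicas (one per slave node):
#   write_hdfs_site /usr/hadoop/hadoop-2.6.0/etc/hadoop /data/hadoop/hdfs/name /data/hadoop/hdfs/data 2
```

The directories should be chosen to suit the disks on the actual nodes, and a replica count above the number of DataNodes cannot be satisfied.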

mapred-site.xml configuration file:

    [root@master hadoop]# cp mapred-site.xml.template mapred-site.xml
    [root@master hadoop]# vi mapred-site.xml
    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
        <!-- jobhistory properties -->
        <property>
            <name>mapreduce.jobhistory.address</name>
            <value>master:10020</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.webapp.address</name>
            <value>master:19888</value>
        </property>
    </configuration>

yarn-site.xml configuration file:

    [root@master hadoop]# vi yarn-site.xml
    <configuration>
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>master</value>
        </property>
        <property>
            <name>yarn.resourcemanager.address</name>
            <value>${yarn.resourcemanager.hostname}:8032</value>
        </property>
        <property>
            <name>yarn.resourcemanager.scheduler.address</name>
            <value>${yarn.resourcemanager.hostname}:8030</value>
        </property>
        <property>
            <name>yarn.resourcemanager.webapp.address</name>
            <value>${yarn.resourcemanager.hostname}:8088</value>
        </property>
        <property>
            <name>yarn.resourcemanager.webapp.https.address</name>
            <value>${yarn.resourcemanager.hostname}:8090</value>
        </property>
        <property>
            <name>yarn.resourcemanager.resource-tracker.address</name>
            <value>${yarn.resourcemanager.hostname}:8031</value>
        </property>
        <property>
            <name>yarn.resourcemanager.admin.address</name>
            <value>${yarn.resourcemanager.hostname}:8033</value>
        </property>
        <property>
            <name>yarn.nodemanager.local-dirs</name>
            <value>/data/hadoop/yarn/local</value>
        </property>
        <property>
            <name>yarn.log-aggregation-enable</name>
            <value>true</value>
        </property>
        <property>
            <name>yarn.nodemanager.remote-app-log-dir</name>
            <value>/data/tmp/logs</value>
        </property>
        <property>
            <name>yarn.log.server.url</name>
            <value>http://master:19888/jobhistory/logs/</value>
            <description>URL for job history server</description>
        </property>
        <property>
            <name>yarn.nodemanager.vmem-check-enabled</name>
            <value>false</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
            <value>org.apache.hadoop.mapred.ShuffleHandler</value>
        </property>
        <property>
            <name>yarn.nodemanager.resource.memory-mb</name>
            <value>2048</value>
        </property>
        <property>
            <name>yarn.scheduler.minimum-allocation-mb</name>
            <value>512</value>
        </property>
        <property>
            <name>yarn.scheduler.maximum-allocation-mb</name>
            <value>4096</value>
        </property>
        <property>
            <name>mapreduce.map.memory.mb</name>
            <value>2048</value>
        </property>
        <property>
            <name>mapreduce.reduce.memory.mb</name>
            <value>2048</value>
        </property>
        <property>
            <name>yarn.nodemanager.resource.cpu-vcores</name>
            <value>1</value>
        </property>
    </configuration>
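The memory settings in yarn-site.xml interact: yarn.nodemanager.resource.memory-mb caps what one node can hand out, while each map or reduce task requests mapreduce.map.memory.mb or mapreduce.reduce.memory.mb. A rough back-of-the-envelope sketch of how many containers fit on one node, counting memory only and ignoring vcores and the ApplicationMaster; the function name `containers_per_node` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: whole containers of a given size that fit in a node's memory.
# Usage: containers_per_node <node-memory-mb> <container-memory-mb>
containers_per_node() {
    echo $(( $1 / $2 ))   # integer division: partial containers do not count
}

containers_per_node 2048 512     # minimum-size containers per node: prints 4
containers_per_node 2048 2048    # map or reduce containers per node: prints 1
```

With these values a single 2048 MB task fills a whole node, and a request near the 4096 MB scheduler maximum would not fit on any node configured this way.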

slaves configuration file:

    [root@master hadoop]# vi slaves
    # Remove localhost and add:
    slave1
    slave2
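The slaves file is just one hostname per line, so it can also be generated rather than edited. A minimal sketch; the function name `write_slaves` is hypothetical, and the hostnames are the two used above:

```shell
#!/bin/sh
# Hypothetical helper: overwrite a slaves file with one hostname per line.
# Usage: write_slaves <slaves-file> <host>...
write_slaves() {
    file=$1
    shift
    # Refuse to write an empty list, which would leave the cluster with no workers.
    [ $# -ge 1 ] || { echo "no hostnames given" >&2; return 1; }
    printf '%s\n' "$@" > "$file"    # overwriting also drops the default localhost entry
}

# Example (path built from HADOOP_HOME as set in /etc/profile):
#   write_slaves /usr/hadoop/hadoop-2.6.0/etc/hadoop/slaves slave1 slave2
```

Overwriting instead of appending keeps the result the same however many times the script runs.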